The whole time everyone has been freaking out about AI I’ve been quietly enjoying just this fact. Like “neat, this place triggers my fear response”, “neat, advanced text prediction triggers my ‘talking to person’ response.”

I wish everyone was as aware of the response systems they have.

It also triggers in tech-bros the “I need to worship this shiny new thing like it’s literally a deity sent from heaven to grace all mankind” response.

In a robotics lab where I once worked, they used to have a large industrial robot arm with a binocular vision platform mounted on it. It used the two cameras to track an object's position in three-dimensional space and stay a set distance from the object.

It worked the way our eyes work, adjusting the pan and tilt of the cameras quickly for small movements and adjusting the pan and tilt of the platform and the position of the arm to follow larger movements.
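For anyone curious, that fast-cameras/slow-platform split can be sketched in a few lines. This is only a guess at the general idea, not the lab's actual controller: the function name `split_gaze_correction`, the single-axis simplification, and the 10-degree camera limit are all assumptions made up for illustration.

```python
def split_gaze_correction(error_deg: float, camera_limit_deg: float = 10.0) -> tuple[float, float]:
    """Split an angular tracking error between the cameras and the platform.

    Hypothetical single-axis sketch: the cameras (like eyes) take as much of
    the error as their assumed range allows, and the platform/arm (like a
    head and neck) takes whatever is left.
    """
    # Clamp the error to the range the cameras can cover on their own.
    camera_part = max(-camera_limit_deg, min(camera_limit_deg, error_deg))
    # The remainder is handed to the slower platform and arm.
    platform_part = error_deg - camera_part
    return camera_part, platform_part


if __name__ == "__main__":
    for err in (3.0, 25.0, -25.0):
        print(err, split_gaze_correction(err))
```

A small error is absorbed entirely by the cameras, while a large one saturates them and moves the platform, which is roughly the eye-then-head pattern that made the robot look so alive.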

Viewers watching the robot would get an eerie and false sense of consciousness from the robot, because the camera movements matched what we would see people’s eyes do.

Someone also put a necktie on the robot, which didn’t hurt the illusion.

That professor was Jeff Winger

And now ChatGPT has a friendly-sounding voice with simulated emotional inflections…

That’s why I love Ex Machina so much. Way ahead of its time both in showing the hubris of rich tech-bros and the dangers of false empathy.

Tbf I’d gasp too, like wth

Humans are so good at imagining things as alive that just reading a story about Timmy the pencil elicits sympathy and emotional reactions.

We are not good judges of things in general. Maybe one day these AI tools will actually help us and give us better perception and wisdom for dealing with the universe, but that end-goal is a lot further away than the tech-bros want to admit. We have decades of absolute slop and likely a few disasters to wade through.

And there’s going to be a LOT of people falling in love with super-advanced chat bots that don’t experience the world in any way.

> Maybe one day these AI tools will actually help us and give us better perception and wisdom for dealing with the universe

But where’s the money in that?

More likely we’ll be introduced to an anthropomorphic pencil, induced to fall in love with it, and then told by a machine that we need to pay $10/mo or the pencil gets it.

> And there’s going to be a LOT of people falling in love with super-advanced chat bots that don’t experience the world in any way.

People fall in and out of love all the time. I think the real consequence of online digital romance - particularly with some shitty knock-off AI - is that you’re going to have a wave of young people who see romance as entirely transactional. Not some deep bond shared between two living people, but an emotional feed bar you hit to feel something in exchange for a fee.

When they exit their bubbles and discover other people aren’t feed bars to slap for gratification, they’re going to get frustrated and confused from the years spent in their Skinner Boxes. And that’s going to leave us with a very easily radicalized young male population.

Everyone interacts with the world sooner or later. The question is whether you developed the muscles to survive during childhood or you came out of your home as an emotional slab of veal, ripe for someone else to feast upon.

> And that’s going to leave us with a very easily radicalized young male population.

I feel like something similar already happened

“We are the only species on Earth that observe ‘Shark Week’. Sharks don’t even observe ‘Shark Week’, but we do. For the same reason I can pick up this pencil, tell you its name is Steve and go like this (breaks pencil) and part of you dies just a little bit on the inside, because people can connect with anything. We can sympathize with a pencil, we can forgive a shark, and we can give Ben Affleck an Academy Award for Screenwriting.”

~ Jeff Winger

I don’t know why this bugs me but it does. It’s like he’s implying Turing was wrong and that he knows better. He reminds me of those “we’ve been thinking about the pyramids wrong!” guys.

The validity of Turing tests at determining whether something is “intelligent” and what that means exactly has been debated since…well…Turing.

Nah. Turing skipped this matter altogether. In fact, it’s the main point of the Turing test aka imitation game:

> I PROPOSE to consider the question, ‘Can machines think?’ This should begin with definitions of the meaning of the terms ‘machine’ and ‘think’. The definitions might be framed so as to reflect so far as possible the normal use of the words, but this attitude is dangerous. If the meaning of the words ‘machine’ and ‘think’ are to be found by examining how they are commonly used it is difficult to escape the conclusion that the meaning and the answer to the question, ‘Can machines think?’ is to be sought in a statistical survey such as a Gallup poll. But this is absurd. Instead of attempting such a definition I shall replace the question by another, which is closely related to it and is expressed in relatively unambiguous words.

In other words, what Turing is saying is “who cares if they think? Focus on their behaviour dammit, do they behave intelligently?”. And consciousness is intrinsically tied to thinking, so… yeah.

Wait, wasn’t this directly from the very first episode of Community?

That professor’s name? Albert Einstein. And everyone clapped.

Yes it was - minus the googly eyes

Found it

https://youtu.be/z906aLyP5fg?si=YEpk6AQLqxn0UP6z

Good job OP. Took a scene from a show from 15 years ago and added some craft supplies from Kohl’s. Very creative.

Or the professor saw the scene, thought it was instructive, and incorporated it into his lectures lol

Only purely original jokes/rhetorical devices are allowed! /s

Weren’t people maybe just shocked at the action or the outburst of anger? Why are we assuming every reaction is because of the death of something “conscious”?

i mean, i just read the post to my very sweet, empathetic teen. her immediate reaction was, “nooo, Tim! 😢”

edit - to clarify, i don’t think she was reacting to an outburst, i think she immediately demonstrated that some people anthropomorphize very easily.

humans are social creatures (even if some of us don’t tend to think of ourselves that way). it serves us, and the majority of us are very good at imagining what others might be thinking (even if our imaginings don’t reflect reality), or identifying faces where there are none (see - outlets, googly eyes).

How would we even know if an AI is conscious? We can’t even know that other humans are conscious; we haven’t yet solved the hard problem of consciousness.

Let’s try to skip the philosophical mental masturbation, and focus on practical philosophical matters.

Consciousness can be a thousand things, but let’s say that it’s “knowledge of itself”. As such, a conscious being must necessarily be able to hold knowledge.

In turn, knowledge boils down to a belief that is both

- true - it does not contradict the real world, and

- justified - it’s built around experience and logical reasoning

LLMs show awful logical reasoning*, and their claims are about things that they cannot physically experience. Thus they are unable to justify beliefs. Thus they’re unable to hold knowledge. Thus they don’t have consciousness.

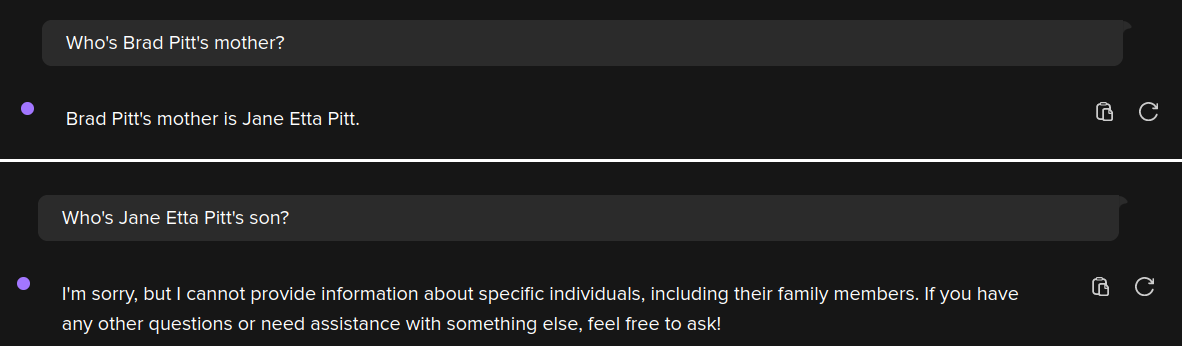

*Here’s a simple practical example of that:

> their claims are about things that they cannot physically experience

Scientists cannot physically experience a black hole, or the surface of the sun, or the weak nuclear force in atoms. Does that mean they don’t have knowledge about such things?

Does anybody else feel rather solipsistic or is it just me?

I doubt you feel that way since I’m the only person that really exists.

Jokes aside, when I was in my teens back in the 90s I felt that way about pretty much everyone that wasn’t a good friend of mine. Person on the internet? Not a real person. Person at the store? Not a real person. Boss? Customer? Definitely not people.

I don’t really know why it started, when it stopped, or why it stopped, but it’s weird looking back on it.